Process

A process is what the OS creates when it executes a program. An analogy may help to grasp the concept of a process.

Mary is a housewife who planned to bake an orange cake. She picked up her cookbook and took from it the recipe for an orange cake. It’s as if she went to the HD to get the file Orange Cake, which corresponds to the program (or recipe) for making an orange cake. The recipe is simply a program: the way to make an orange cake.

But when Mary starts executing the recipe, she uses the resources available in her kitchen (bowls, Pyrex dishes, mixers, ovens, dishwashers, etc.) to do it. And the recipe gradually turns into its goal, the orange cake.

Between the recipe (program) and the finished cake, we are precisely in the process of making the orange cake.

The process of making the cake does not care about the language in which the recipe was written (Portuguese, English, Mandarin, or other), nor about the type, features, brand or model of the equipment on which it is carried out. All that matters are the ingredients, their quantities, how they are mixed, and the cooking time and temperature.

Therefore, the process is not concerned with the language in which the recipe was written or with the machine it runs on.

Thus, we can define a process as follows:

A process is what carries out the goal of a program. It is the abstraction of program execution created by the OS, independent of the characteristics of the machine on which it executes and of the language in which it was programmed.

A process is characterized by various components, of which we highlight:

- The PID (Process ID), a number that uniquely identifies each process.

- A priority level.

- An address space.

- A state at every moment.

- An associated user.
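On a Unix-like system, three of these components (PID, priority and user) can be read for the running process itself. The sketch below is a minimal illustration assuming a POSIX platform such as Linux or macOS, since Python’s `os.getuid` and `os.getpriority` are not available on Windows; the function name `describe_current_process` is our own.

```python
import os


def describe_current_process():
    """Return a few characterizing components of the calling process (Unix only)."""
    return {
        "pid": os.getpid(),                          # the PID
        "nice": os.getpriority(os.PRIO_PROCESS, 0),  # priority as a Unix nice value (0 = this process)
        "uid": os.getuid(),                          # numeric ID of the user the process runs as
    }


if __name__ == "__main__":
    print(describe_current_process())
```

Note that on Unix a lower nice value means a higher scheduling priority.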

The priority is set for each process according to its importance.

But how do you define the importance of a process? Is it its fancy name, its status, its money, or something else?

Calm down. Although the computer was created by man, it did not inherit the behaviors and attitudes that characterize its creator. Don’t be nervous.

The importance of a process depends on whether it is triggered in response to an external stimulus, on how urgently it needs to execute, or on whether the user associated with it has special privileges.

The priority of a process is inversely related to its demand for CPU; i.e., a scientific calculation program, which needs the CPU continuously while running, has a lower priority than a word processor, which only needs the CPU each time a key is pressed.

Under these assumptions, the OS assigns each process a priority level, which it adjusts over time according to the process’s behavior.
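The rule just described, where CPU-hungry processes drift down and interactive ones drift up, can be sketched as a toy adjustment function. This is a hypothetical illustration, not any particular OS’s algorithm: we assume priorities from 0 (lowest) to 10 (highest) and a change of one level per scheduling decision.

```python
MIN_PRIORITY, MAX_PRIORITY = 0, 10  # hypothetical range; higher number = more important


def adjust_priority(priority, used_full_quantum):
    """Toy rule: a process that used its whole time slice loses a level;
    one that gave up the CPU early (e.g. to wait for a key press) gains one."""
    if used_full_quantum:
        priority -= 1   # CPU-bound behavior: lower the priority
    else:
        priority += 1   # I/O-bound (interactive) behavior: raise the priority
    return max(MIN_PRIORITY, min(MAX_PRIORITY, priority))


# A scientific calculation keeps using its whole slice; a word processor blocks early.
calc, editor = 5, 5
for _ in range(3):
    calc = adjust_priority(calc, used_full_quantum=True)
    editor = adjust_priority(editor, used_full_quantum=False)
print(calc, editor)  # 2 8
```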

The address space is a region of memory exclusive to a process, where its executable code (instructions) and data are placed. This space has a specific layout and organization, but we’ll talk about this subject later.

At every moment of its execution, a process has a state, defined by the contents of its address space, the contents of the CPU registers, the instruction pointer, the stack pointer (we’ll see later what this is), the CPU status register while executing this program, and also the system objects it is interacting with (files, peripherals, etc.).

Each process is associated with a user, to whom the OS grants the privileges assigned to that user in the file system for the executable file; these privileges can influence the process priority and the contexts in which the process can run.

Address Space

Since programs are files, when a program needs to run it is the OS that handles it by putting it into memory, so that the CPU can run it in its fetch-execute cycle. As we have seen, the CPU executes only what is in memory. Thus, for the process to begin, its address space must be defined and its binary executable code and its various data structures placed there.

As shown in Figure 1, the address space of a process has several regions, each with its own characteristics:

- Stack

- Heap

- Data

- Code

Data is the area of the address space where data defined in the program code is placed, such as constants and global variables.

Code, also called text, is the part of the address space where the binary code of the executable program is placed.

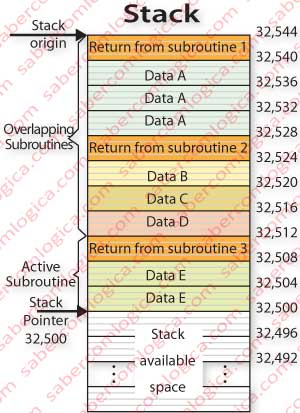
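On Linux you can inspect the regions of a real process’s address space through the `/proc/self/maps` file, in which the kernel labels the heap and stack regions. The sketch below assumes Linux (the file does not exist on Windows or macOS) and only looks at the lines labeled `[heap]` and `[stack]`; code and data appear in that file as mappings of the executable itself.

```python
def labeled_regions(path="/proc/self/maps"):
    """Return {label: (start, end)} for the [heap] and [stack] regions
    of the current process (Linux only)."""
    regions = {}
    with open(path) as maps:
        for line in maps:
            fields = line.split()
            # Line format: start-end perms offset dev inode [pathname]
            if len(fields) >= 6 and fields[5] in ("[heap]", "[stack]"):
                start, end = (int(x, 16) for x in fields[0].split("-"))
                regions[fields[5]] = (start, end)
    return regions


if __name__ == "__main__":
    for label, (start, end) in labeled_regions().items():
        print(f"{label:8} {start:#x}-{end:#x}")
```

On a typical Linux system the heap sits at much lower addresses than the stack, leaving room for both to grow towards each other.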

Stack

The stack is the region of the address space from which the process retrieves data in exactly the reverse order in which it was stacked (as the name suggests). Data is placed using the LIFO (Last In, First Out) policy, i.e. the first data item the process retrieves is always the last one entered.

The stack’s origin is its highest address and its top is its lowest referenced address. The stack grows downwards (towards descending addresses) as it is loaded and shrinks upwards (towards ascending addresses) as it is unloaded.

Data placed on the stack has no specific address of its own. The stack has a single pointer, the stack pointer, a CPU register that points to the last referenced byte cell on the stack. When the stack is empty, the pointer holds the address of the stack’s origin.

The developer can use the stack through push (place on the stack) and pop (take from the stack) instructions, which always refer to the top of the stack.

- When a push instruction is used, the data bytes are written into the positions below the one referenced by the pointer, and the number of bytes written is subtracted from the pointer’s address, so that it keeps pointing to the last referenced cell.

- When a pop instruction is used, the number of bytes corresponding to the desired data is read, in ascending order, starting at the address indicated by the pointer, and the pointer’s address is increased by the number of bytes read, so that it keeps pointing to the last referenced cell.
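The push and pop rules above can be modeled in a few lines. This is a simplified simulation, not real CPU behavior: memory is a Python dictionary from address to byte, `sp` plays the role of the stack pointer, and we reuse the origin address 32544 from Figure 2; the class name is our own.

```python
class SimulatedStack:
    """Downward-growing stack: the origin is the highest address and
    sp always holds the address of the last referenced byte."""

    def __init__(self, origin):
        self.origin = origin
        self.sp = origin           # empty stack: sp points at the origin
        self.memory = {}           # address -> byte value

    def push(self, data: bytes):
        # Write the bytes into the positions below sp, then subtract their count.
        for i, byte in enumerate(data):
            self.memory[self.sp - len(data) + i] = byte
        self.sp -= len(data)

    def pop(self, size: int) -> bytes:
        if self.sp + size > self.origin:
            raise IndexError("pop past the stack origin")
        # Read in ascending address order starting at sp, then add the count.
        data = bytes(self.memory[self.sp + i] for i in range(size))
        self.sp += size
        return data


stack = SimulatedStack(origin=32544)
stack.push(b"RET1")       # 4-byte return address of subroutine 1
print(stack.sp)           # 32540
stack.push(b"A" * 12)     # 12 bytes of local data A
print(stack.sp)           # 32528
stack.pop(12)
stack.pop(4)
print(stack.sp)           # 32544: empty again
```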

The data pushed on top of subroutine return addresses must be local values, since these are discarded when the subroutine returns. Variables written to the stack must always be accessed in the reverse order to the one in which they were written. The developer has no way of referencing a given stack position by its address: writing and reading any data on the stack is always done relative to the stack pointer.

It’s important to ensure that data written to the stack within a subroutine is popped before the return address of that subroutine is read. Otherwise, when the return address is read, some other value will be in its place and will be taken as the return address, causing a program error and aborting its execution.
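The danger described above can be shown with a plain Python list used as a stack (`append` pushes, `pop` pops). The subroutine and the return-address string are hypothetical; the point is only that a forgotten pop leaves local data where the return address is expected.

```python
def call_subroutine(stack, return_address, clean_up):
    stack.append(return_address)   # the call pushes the return address
    stack.append(42)               # the subroutine pushes a local value
    if clean_up:
        stack.pop()                # correct: pop local data before returning
    return stack.pop()             # "return": whatever is on top is used as the return address


good = call_subroutine([], "main+0x10", clean_up=True)
bad = call_subroutine([], "main+0x10", clean_up=False)
print(good)  # main+0x10
print(bad)   # 42: a local value taken as the return address
```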

Figure 2 illustrates the stack operation just described. For simplicity, we assume that data is always written in units of a 32-bit word (4 bytes). So the cells are always represented in sets of 4 bytes and addresses advance in steps of 4.

Cells in orange are the return addresses of the various subroutine levels. On top of these addresses the program pushes local data. When a new return address is pushed before the previous one has been popped, it is because the new subroutine was called from within the subroutine that was active until then, which now becomes inactive. In the figure we have three subroutine levels: the third is active and the first two are inactive. Each one was called from within the previous one.

For instance, when the program calls subroutine 1, its return address, a 32-bit word (4 bytes), is written to the stack and 4 (the number of bytes written) is subtracted from the stack pointer, which now points to address 32544 - 4 = 32540, the address of the last referenced byte.

When the first subroutine’s data is written, 12 bytes are pushed onto the stack and 12 is subtracted from the stack pointer, which now points to address 32528, the address of the last referenced byte.

Data D is written to the stack within the second subroutine by a push instruction. At the next pop, this data, or rather the four bytes that make it up, will be read. The pointer’s address is then increased by 4, now pointing to address 32516, the end of data C.

When the stack pointer points to the byte at address 32528, a pop reads data A, which, if everything is still under control, means reading 12 bytes. The pointer is increased accordingly and now points to the end of the return address of subroutine 1, at position 32540.

When the program returns from that subroutine, the stack will be empty and the pointer will point to stack origin, the address 32544.

The stack is a dynamic space that grows as the program’s subroutine calls nest deeper and shrinks as they return.

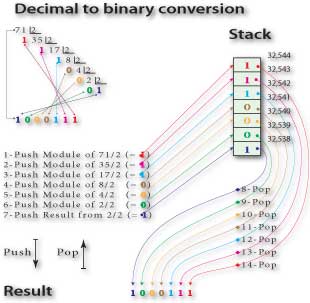

Let’s look at a small example of how the stack can be used in a program: converting a decimal number to binary, shown in Figure 3.

As we know, a decimal number is converted to binary by successively dividing it by two. The remainders of these divisions make up the binary number we are looking for. When the quotient is less than 2, the quotient itself is the last digit, and at that point all the binary digits have been found.

However, the binary number is written by placing the digits in the reverse order to the one in which they were found. So we must invert the order of the values found in the successive divisions.

Wouldn’t it be a lot easier and faster to let the stack help us?

Of course it would. It suffices to push each result as it is found. The results are stored on the stack in descending address order, byte by byte (in this case each value occupies one byte), from its origin to its top. When we pop them, we get them in the reverse order to the one in which they were written, i.e. the correct order of digits for the binary representation, because popping goes from the stack’s top to its origin. We no longer need to flip them.
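Here is the idea of Figure 3 as code: a minimal sketch using a Python list as the stack (`append` is push, `pop` is pop); the function name `to_binary` is ours. The remainders are pushed as they are found, and popping them yields the digits already in the right order.

```python
def to_binary(n: int) -> str:
    """Convert a non-negative decimal integer to a binary string using a stack."""
    if n < 0:
        raise ValueError("only non-negative numbers are handled")
    stack = []
    while n >= 2:
        stack.append(n % 2)   # push the remainder of the division by 2
        n //= 2
    stack.append(n)           # the final quotient (0 or 1) is the last digit found
    digits = []
    while stack:
        digits.append(str(stack.pop()))  # pop returns digits in reverse order of discovery
    return "".join(digits)


print(to_binary(13))  # 1101
```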

Heap

The heap is the region of the address space where data is placed in no particular order, unlike on the stack. Every data block on the heap is reached through a pointer to the start address of that block. In many languages this allocation is handled automatically, but in some high-level languages, such as C, the programmer can allocate space for any data structure and must always be careful to release that space when it is no longer needed.

The heap is a dynamic region whose size varies with the needs of the program. The heap grows in the opposite direction to the stack, and both grow into the free part of the process’s address space, which should be large enough to hold their dynamic needs. The heap is where data created at runtime is placed for the CPU to use.

While the C language lets the developer allocate memory for data structures through library functions (e.g. malloc), other languages, such as Java, handle all memory management themselves, creating and releasing the space needed at runtime.

This is a sensitive issue in programming languages, through which some important virus attacks can be carried out. That is precisely why some important high-level languages take memory management upon themselves.
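To make the idea of heap blocks and pointers concrete, here is a toy first-fit allocator. It is a hypothetical teaching model, not how C’s `malloc` is actually implemented: addresses are plain integers, `malloc` returns the start address of a block (or `None`, much as C’s `malloc` returns NULL when it fails), and `free` gives the block back and merges it with adjacent free blocks.

```python
class ToyHeap:
    """First-fit allocator over the address range [base, base + size)."""

    def __init__(self, base, size):
        self.free_blocks = [(base, size)]  # (start, length), kept sorted by start
        self.used = {}                     # start address -> block length

    def malloc(self, n):
        for i, (start, length) in enumerate(self.free_blocks):
            if length >= n:                # first free block that is big enough
                if length == n:
                    del self.free_blocks[i]
                else:
                    self.free_blocks[i] = (start + n, length - n)
                self.used[start] = n
                return start               # "pointer" to the start of the block
        return None                        # no room left

    def free(self, ptr):
        n = self.used.pop(ptr)             # KeyError: double free or invalid pointer
        self.free_blocks.append((ptr, n))
        self.free_blocks.sort()
        merged = []                        # coalesce adjacent free blocks
        for start, length in self.free_blocks:
            if merged and merged[-1][0] + merged[-1][1] == start:
                merged[-1] = (merged[-1][0], merged[-1][1] + length)
            else:
                merged.append((start, length))
        self.free_blocks = merged


heap = ToyHeap(base=1000, size=64)
a = heap.malloc(16)   # 1000
b = heap.malloc(8)    # 1016
heap.free(a)          # the programmer releases a when it is no longer needed
c = heap.malloc(8)    # 1000: the released space is reused
```

Forgetting the `free` call would leave those 16 bytes unusable for the rest of the run, which is the kind of leak the text warns about.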

Users

There is always a user associated with an OS executing on any system. The potential users of a system are first defined in the OS that manages it. When the computer is turned on, the OS authenticates the user.

To be authenticated, the user gives the OS a set of characters that matches the user’s name, or username. The OS replies with a challenge, asking for another set of characters that only it and the user know: the password. If all elements are correct, the authentication procedure is complete and the OS associates the user with the system.

When a user tries to access any file, for instance an executable to start a process, the OS proceeds with the user’s authorization. The privileges of the different users and groups for each file are defined in the file system. Based on the permissions assigned to each user and each group, the user will or will not be authorized to execute the desired file.

We emphasize the importance of understanding the difference between Authentication and Authorization, two aspects that matter a lot in security, to which we will certainly return.
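The username-and-password exchange can be sketched as follows. The user table, the user `mary` and her password are hypothetical, and, as real systems typically do, we store only a salted hash of the password (here with PBKDF2 from Python’s `hashlib`) rather than the password itself; the iteration count is an illustrative choice.

```python
import hashlib
import hmac
import os


def hash_password(password: str, salt: bytes) -> bytes:
    # PBKDF2-HMAC-SHA256 with an illustrative iteration count
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)


# Hypothetical user database: username -> (salt, password hash)
_salt = os.urandom(16)
USERS = {"mary": (_salt, hash_password("orange-cake", _salt))}


def authenticate(username: str, password: str) -> bool:
    record = USERS.get(username)
    if record is None:
        return False                     # unknown username
    salt, stored_hash = record
    # constant-time comparison of the stored hash and the freshly computed one
    return hmac.compare_digest(stored_hash, hash_password(password, salt))


print(authenticate("mary", "orange-cake"))  # True
print(authenticate("mary", "lemon-pie"))    # False
```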
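Authorization can be illustrated with the Unix permission model, where each file has read, write and execute bits for its owner, its group and everyone else. The sketch below is a simplification under that assumption: it checks only the execute bits and ignores special cases such as the superuser and access control lists; the function name `may_execute` is ours.

```python
import stat


def may_execute(mode, file_uid, file_gid, uid, gids):
    """Decide whether user `uid` (member of groups `gids`) may execute a file
    with permission bits `mode`, owned by user file_uid and group file_gid."""
    if uid == file_uid:
        return bool(mode & stat.S_IXUSR)   # owner: only the owner bits count
    if file_gid in gids:
        return bool(mode & stat.S_IXGRP)   # group member: the group bits
    return bool(mode & stat.S_IXOTH)       # everyone else: the "other" bits


# rwxr-x--- : owner and group may execute, others may not
mode = 0o750
print(may_execute(mode, 1000, 100, uid=1000, gids={1000}))  # True  (owner)
print(may_execute(mode, 1000, 100, uid=1001, gids={100}))   # True  (group)
print(may_execute(mode, 1000, 100, uid=1002, gids={200}))   # False (other)
```

The user was already authenticated before this check runs; authorization only decides what that known user may do.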

The States of a Process

There are three possible states for each process:

- Running: the state of a process that is using the CPU.

- Blocked: the state of a process from which CPU access was withdrawn because it is waiting for information requested from external devices. Even with CPU access, it could not make any progress.

- Executable: the state of a process that is able to use the CPU as soon as it is granted. It waits in the queue for its turn.
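The three states and the transitions between them can be written down as a small state machine. This is a sketch; the reasons given for each transition summarize the descriptions in this chapter (being granted the CPU, losing it, waiting for a device, the device answering).

```python
from enum import Enum


class State(Enum):
    RUNNING = "running"
    BLOCKED = "blocked"
    EXECUTABLE = "executable"   # able to run, waiting in the queue for the CPU


# Allowed transitions and the typical reason for each
TRANSITIONS = {
    (State.EXECUTABLE, State.RUNNING): "granted the CPU",
    (State.RUNNING, State.EXECUTABLE): "CPU withdrawn (e.g. Quantum exceeded)",
    (State.RUNNING, State.BLOCKED): "waiting for data from a device",
    (State.BLOCKED, State.EXECUTABLE): "the device delivered the data",
}


def move(current, target):
    if (current, target) not in TRANSITIONS:
        raise ValueError(f"illegal transition {current.name} -> {target.name}")
    return target


s = State.EXECUTABLE
s = move(s, State.RUNNING)
s = move(s, State.BLOCKED)
s = move(s, State.EXECUTABLE)   # a blocked process queues again before it can run
```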

Multiprocessing

Our old friend Mary found that her kitchen was often idle during her cooking jobs. To recover the money she had spent on kitchen equipment, she decided to cook other people’s recipes on demand during the periods when she wasn’t working on her own.

She quickly realized that while the cooking processes were on the stove or in the oven she was not doing anything (maybe crochet, but not cooking), and that she could use that time to make progress in other areas with unoccupied equipment. In this way, even the stove and the oven began to be used for much more of the time.

Mary was very pleased, because she realized that with the same equipment she could cook more recipes, producing more and processing more recipes in the same amount of time. It was the poor crochet that fell behind, but a crisis is a crisis and crochet doesn’t fill the belly.

Mary was multiprocessing recipes in her kitchen. Mary was the OS, her kitchen the CPU, and her kitchen’s equipment the peripherals. When a process spent a long time on a peripheral (e.g. the oven), Mary removed its recipe from the kitchen (obviously leaving the oven to deal with its task on its own), recording the state of its execution at that moment, and started processing another recipe in her kitchen with other, faster peripherals.

When the oven beeps, it is calling her attention: the process running in it is ready and needs her.

Then Mary suspends the recipe in progress, removes it from the kitchen while recording the state of its execution, and continues with the first one, resuming it in exactly the state in which it was left and recorded in her memory.

On a computer, for the same reasons (performance and profitability of the hardware), the same thing is done.

On a computer there are always multiple processes running simultaneously. This is called multiprocessing. Managing multiprocessing is one of the most complex and interesting jobs of an OS.

But if the CPU executes only one process at a time, how is that possible?

It’s true: the CPU runs only one program, or rather one process, at each moment. But the OS, which manages the CPU’s time efficiently, is permanently giving it different processes to run, i.e. switching between the programs running on the CPU.

Almost everyone reading this has certainly had more than one program running simultaneously on their computer, for instance a word processor, an image editor, an e-mail program, a web browser, a video player and an antivirus. And they are all running simultaneously (though not at the same time). We mentioned only those that we started ourselves, because behind the scenes there are dozens of programs running in the system’s service, the so-called system processes.

Why say simultaneously (but not at the same time)?

In reality, they are all processes, i.e. programs under execution. They are all in execution simultaneously, but they are not all executing on the CPU, where at each moment (at the same time) only one can be.

The fact is that the CPU executes around three billion instructions per second, which allows the OS to switch between processes as many times as it needs in one second without us even noticing, giving us the feeling that all of them are running at the same time.

When we are watching an HD video or playing one of the most modern 3D games, this still holds, because behind the scenes the OS does not forget that the computer needs its attention, nor the ongoing execution of the programs that ensure its proper operation and safety.

We can even watch that movie while the antivirus does a full scan of the computer and μTorrent (for example) performs a long file download. The film runs as if nothing else were going on in the computer (this may obviously not hold, depending on the characteristics of the system in use).

As mentioned above, and let’s take it for granted, it is the OS that takes CPU access away from a process or gives it to one. It is in charge; it is the boss. Let’s now see under what circumstances the OS exercises its dictatorial power, analyzing some situations that may lead to process switching on the CPU.

Whenever a process is waiting for data requested from peripherals (memory, disk or others), the OS may withdraw the process’s right to the CPU and assign it to another process that was queued for execution in the meantime. This is easy to understand if we consider that such a request can take from about 40 nanoseconds for MM (Main Memory), to 5 milliseconds for the HD (Hard Disk), up to an indefinite time in the case of a keyboard, for instance.

Have you ever noticed how long it takes between pressing two keys on a keyboard? It seems insignificant, doesn’t it?

Given that the CPU can execute around 3 billion instructions per second and that an expert typist presses about 3 keys per second, the CPU can execute 1 billion instructions between two keystrokes. From the CPU’s perspective, that is an eternity. The CPU might say: “Think of the huge amount of work I could do while standing still, if only I were given it.”
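The orders of magnitude in the last few paragraphs are easy to check: the number of instructions the CPU could execute during a wait is its rate multiplied by the waiting time. A short sketch, assuming the 3 billion instructions per second used in the text:

```python
IPS = 3e9  # instructions per second, the figure assumed in the text


def instructions_during(wait_seconds: float, ips: float = IPS) -> int:
    return round(ips * wait_seconds)


print(instructions_during(40e-9))  # main memory access (40 ns): 120
print(instructions_during(5e-3))   # hard disk access (5 ms): 15000000
print(instructions_during(1 / 3))  # between two keystrokes (3 keys/s): 1000000000
```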

Whenever a process exceeds a given period of time called the Quantum, defined as the maximum consecutive time during which a process can use the CPU, its right to the CPU is withdrawn and assigned to another process in the queue. This is easy to understand, because otherwise a process performing complex scientific calculations (e.g.) on data in cache could hold the CPU indefinitely, halting all other processes and therefore the computer itself.

Whenever a process with higher priority requests the CPU, the OS can withdraw CPU access from the running process and grant it to the requester. Imagine that the CPU is running a scientific calculation program and an HD access is requested. The OS takes the CPU from the first process, starts the HD access and immediately gives CPU access back to the process that was running, while waiting for the disk controller to return the requested data. Likewise, when a key is pressed, the OS takes CPU access from the running process, reads the value of the key pressed and gives CPU access back to the first process.
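Quantum-based switching is the heart of round-robin scheduling. The sketch below is a simplification: all processes are assumed to be ready at time zero and never to block, and the Quantum and the CPU time each process needs are measured in arbitrary time units; the function name is ours.

```python
from collections import deque


def round_robin(bursts, quantum):
    """bursts: {process name: CPU time still needed}.
    Returns the order of CPU turns as (name, time used) pairs."""
    queue = deque(bursts.items())
    timeline = []
    while queue:
        name, remaining = queue.popleft()
        ran = min(quantum, remaining)   # run until done or until the Quantum expires
        timeline.append((name, ran))
        remaining -= ran
        if remaining > 0:
            queue.append((name, remaining))  # back to the end of the queue
    return timeline


print(round_robin({"A": 5, "B": 2}, quantum=2))
# [('A', 2), ('B', 2), ('A', 2), ('A', 1)]
```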

Operating Systems Organization

An OS is organized into three key areas:

- The Kernel.

- The system call library.

- The system processes.

The OS can run in two modes:

- The User mode and

- The Kernel mode.

The Kernel is a maximum-security area of the computer to which only the system has access.

In User mode, the OS only has access to the address space defined for the process that the user is running.

In Kernel mode, the OS has access to its own address space and to the address spaces of all processes, without any restrictions.

It’s easy to understand why the Kernel area is so exclusive. Anyone who could run the Kernel management routines on their own would have access to the entire computer and could do whatever they wanted.

The only way to get OS Kernel routines to run is through interrupts or exceptions, situations managed by the CPU itself, properly identified and passed on to the OS.

When an interrupt is signaled to the CPU, it switches the running-mode bit from User to Kernel and passes the interrupt ID to the OS, which executes the routine corresponding to the identified interrupt. Once that routine has executed, the running-mode bit is reset to User mode. This procedure is called a System Call.

The routines for handling exceptions and interrupts cannot be reached any other way, i.e. they cannot be invoked by a user, who knows neither their addresses nor how to call them. Only the system can access them, in the way just described.
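The mechanism just described, a mode bit flipped around a routine looked up by interrupt ID, can be modeled in a few lines. This is a conceptual sketch only: the ID 0x21, the class and the handler name are hypothetical, and real hardware performs the mode switch in the CPU, not in application code.

```python
USER, KERNEL = 0, 1


class ToyCPU:
    def __init__(self, handlers):
        self.mode = USER                 # the running-mode bit
        self.handlers = handlers         # interrupt ID -> kernel routine
        self.log = []

    def interrupt(self, irq_id):
        """Switch to Kernel mode, run the routine for irq_id, return to User mode."""
        self.mode = KERNEL
        try:
            handler = self.handlers[irq_id]  # routines are reachable only through this table
            return handler(self)
        finally:
            self.mode = USER             # always drop back to User mode


def keyboard_handler(cpu):
    cpu.log.append(("keyboard", cpu.mode))  # records the mode while the routine runs
    return "key read"


cpu = ToyCPU({0x21: keyboard_handler})
print(cpu.interrupt(0x21), cpu.mode, cpu.log)  # key read 0 [('keyboard', 1)]
```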

So, what are system processes? They are not hidden: we can see their names in the Task Manager.

System calls are costly in performance. Therefore, when they can be avoided, the system creates processes that it owns. This is the case for processes the OS needs in order to run, which normally start with the system and keep running from then on, and which do not pose security problems for the OS, because they run outside the kernel.

This is the case, among many others, of the file system indexer, the authentication system, network listening services, etc. If you have Windows on your computer, open the Task Manager window by right-clicking the task bar and choosing that option. Then open the Processes tab and select Show processes from all users.

You’ll have the opportunity to check several things:

- The number of processes running on your computer.

- The processes owned by the System, which we have just mentioned.

- The processes’ descriptions, if you are curious about them.

- Through the System Idle Process, the percentage of CPU capacity that is idle at each moment, and therefore how much of it you are using.
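On Linux the same kind of information is exposed by the kernel under `/proc`, where each running process has a directory named after its PID. A minimal sketch, assuming Linux (neither Windows nor macOS provides `/proc` in this form); the function names are ours.

```python
import os


def running_pids():
    """PIDs of all processes visible in /proc (Linux only)."""
    return sorted(int(name) for name in os.listdir("/proc") if name.isdigit())


def process_name(pid):
    with open(f"/proc/{pid}/comm") as f:   # short command name of the process
        return f.read().strip()


pids = running_pids()
print(len(pids), "processes")
print(os.getpid(), process_name(os.getpid()))
```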

With this new concept acquired, we can now talk about interrupts and exceptions.

How does the OS know that a device wants to communicate with a process, or that a process has already completed its job with a peripheral?

Interrupts and Exceptions

As with Mary’s oven, the CPU also gets a beep: an interrupt signal (one bit) is triggered to get the CPU’s attention.

To do this, the CPU has pins whose state is defined by one bit each. Let’s say that the state of the interrupts is held in one or more registers, in which each D flip-flop’s bit represents the status of a pin and corresponds to an IRQ (Interrupt ReQuest).

A change in a pin’s state (active when set) means that there is an IRQ from the peripheral it represents, or an exception associated with that IRQ.

Although the OS commands all operations in a computer, it is the CPU that actually tells the OS when, and under what circumstances, to act.

Each IRQ has a specific identification, a one-byte number held in an OS table where it is associated with a system call, so that the OS can invoke the appropriate routine.

Process switching on the CPU is also performed this way, as a result of a particular IRQ. Switching when the Quantum is exceeded also results from an IRQ.
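A register of interrupt bits can be modeled with an integer used as a bit field, where bit n set means that IRQ n is pending. This is a simplified sketch: we assume, as some interrupt controllers do, that the lowest-numbered pending IRQ is serviced first; the class name is ours.

```python
class PendingIRQs:
    def __init__(self):
        self.bits = 0                       # one bit per IRQ line

    def raise_irq(self, n):
        self.bits |= 1 << n                 # the device sets its pin

    def next_irq(self):
        """Return and clear the lowest-numbered pending IRQ, or None."""
        if self.bits == 0:
            return None
        n = (self.bits & -self.bits).bit_length() - 1   # index of the lowest set bit
        self.bits &= ~(1 << n)              # acknowledge: clear the bit
        return n


irqs = PendingIRQs()
irqs.raise_irq(4)
irqs.raise_irq(1)
print(bin(irqs.bits))   # 0b10010
print(irqs.next_irq(), irqs.next_irq(), irqs.next_irq())  # 1 4 None
```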

Did you know that the CPU also has tics?

Well, as it was made by humans, it also has a tic. At regular intervals the CPU blinks, and the OS is aware of it.

The tick, which we will now call by its correct name, Clock Tick, is an interval corresponding to the number of clock cycles needed to complete a period typically in the order of one to a few tens of milliseconds, differing between systems. When the OS sets the Quantum, it does so as a number of clock ticks. When a running process reaches this value, an IRQ is triggered.

For any IRQ that may cause process switching on the CPU, the OS invokes a routine called Dispatch, whose function is to examine the IRQ, check whether an immediate switch is justified and, if so, carry it out.
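Counting the Quantum in clock ticks can be sketched as follows. This is a hypothetical model: we assume the timer interrupt calls `on_tick` for the running process, and a `True` result stands for the IRQ that asks the Dispatch routine to consider a switch.

```python
class QuantumCounter:
    def __init__(self, quantum_ticks):
        self.quantum_ticks = quantum_ticks  # the Quantum, expressed in clock ticks
        self.used = 0

    def on_tick(self):
        """Called on every clock tick; True means the Quantum has been reached."""
        self.used += 1
        if self.used >= self.quantum_ticks:
            self.used = 0                   # a fresh Quantum for the next turn
            return True
        return False


counter = QuantumCounter(quantum_ticks=3)
print([counter.on_tick() for _ in range(7)])
# [False, False, True, False, False, True, False]
```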

When processes are switched, the entire execution context of the process in the CPU is saved to memory:

- Active registers (which may number in the tens, unlike in our earlier analysis of the CPU, where we considered only one register).

- Instruction Pointer.

- Stack Pointer.

- State register.

For safety reasons, these values are not stored on the stack in the process’s address space. Instead, a stack is created in the OS kernel for this process’s data, where it is protected against any unauthorized access.

When the process returns to the CPU for execution, these values are taken from that kernel stack and put back in their proper places in the CPU, thus switching the execution context to that process, which continues to run exactly from the state it was in.
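The save-and-restore step can be modeled with dictionaries: one for the CPU’s registers and one entry per process for its saved context, standing in for the protected kernel-side storage. The register names and values below are illustrative, not those of any real CPU.

```python
cpu = {"r0": 0, "r1": 0, "ip": 0, "sp": 0, "status": 0}  # illustrative register set
saved_contexts = {}   # PID -> saved register values (kept on the kernel side)


def context_switch(old_pid, new_pid):
    saved_contexts[old_pid] = dict(cpu)        # save every register of the old process
    cpu.update(saved_contexts.pop(new_pid))    # restore the new process exactly as it was


# Process 1 is running with some register values; process 2 was saved earlier.
cpu.update({"r0": 7, "r1": 9, "ip": 0x400, "sp": 32528, "status": 1})
saved_contexts[2] = {"r0": 3, "r1": 5, "ip": 0x800, "sp": 61000, "status": 0}

context_switch(1, 2)   # now process 2 runs
context_switch(2, 1)   # and process 1 resumes
print(cpu)  # {'r0': 7, 'r1': 9, 'ip': 1024, 'sp': 32528, 'status': 1}
```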

For the Dispatch routine to decide which process to run on the CPU, the OS has another routine, called Scheduling, whose function is to keep an ordered list of the processes in the executable state. This list is sorted by execution priority; it is a waiting list, constantly updated by the scheduling routine.

The Quantum was created to limit the time the CPU is granted to a process to a maximum period, thus preventing a CPU-intensive process from taking over the CPU indefinitely if no IRQ occurs in the meantime.

However, the opposite was not neglected: particular care was taken to provide more CPU time to processes that use it intensively. Thus, processes’ Quanta can differ. When they do, the Quantum is longer for lower-priority processes (the most CPU-intensive) than for higher-priority ones (the less CPU-intensive).

In addition, the Dispatch routine, in its analysis prior to process switching, can decide whether to switch immediately or to give the running process more CPU time, according to the percentage of its Quantum already used.

As we can see, there is a continuous interaction between the OS and the hardware, in this case the CPU, which remains the commander of operations but subject to the criteria of the OS. This implies that the OS must know perfectly, and cater to, the CPU of the system it manages.

The management of interrupts and exceptions and process switching are among the most complex functions of an OS. We believe we have covered the key ideas needed to understand them. We won’t go further because, besides not being the purpose of this study, we would risk causing a lot of confusion at this stage.
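The scheduler’s ordered waiting list behaves like a priority queue. A minimal sketch with Python’s `heapq`, assuming that a smaller number means a higher priority (a convention we chose, as with Unix nice values) and that processes with equal priority keep their arrival order; the class and process names are hypothetical.

```python
import heapq
import itertools


class ReadyList:
    def __init__(self):
        self._heap = []
        self._arrival = itertools.count()   # tie-breaker: first come, first served

    def add(self, priority, name):
        heapq.heappush(self._heap, (priority, next(self._arrival), name))

    def dispatch(self):
        """Remove and return the executable process with the highest priority."""
        priority, _, name = heapq.heappop(self._heap)
        return name


ready = ReadyList()
ready.add(5, "scientific calculation")
ready.add(1, "word processor")
ready.add(5, "antivirus scan")
print([ready.dispatch() for _ in range(3)])
# ['word processor', 'scientific calculation', 'antivirus scan']
```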