What is a CPU

The CPU, short for Central Processing Unit, is the computer’s brain. It is the component that makes a computer different from a washing machine or a simple calculator.

And what is the reason for this difference?

The CPU is an integrated circuit (IC) whose behavior can be controlled by people through programming. This IC is composed of logical circuits interconnected in such a way that, when the states 0 and 1 are applied to it in specific combinations, it executes operations that serve a specific purpose. Each of these combinations opens a specific path through the interconnected logical circuits. These combinations are the instructions, each one leading to a specific operation. Instructions that follow each other in a predetermined sequence form a program, which leads the CPU to execute a specific task.

To deal with a CPU we need to know how it works and how we can talk to it. We already know the “01bet”, the computer’s alphabet. Now we must learn how to make words with it, phrases (instructions) with the words and finally texts (programs) with the phrases. That was precisely our purpose when we started this work: to make it easier to approach something that is complex, very complex, but whose complexity comes from the sum of the most elementary simplicities.

So, the CPU is an interactive IC, interacting with its surroundings and with people. The CPU has three essential components:

- The ALU (Arithmetic and Logic Unit).

- The Control Unit.

- The Computer Registers.

The ALU contains all the CPU’s logical and arithmetical circuits: those we dealt with in the chapter about Logical Circuits and many more, which have grown in complexity as CPUs have evolved.

The Control Unit is responsible for issuing the control signals that interconnect the circuits in the desired manner so that they can fulfill their task. It is composed basically of decoding and selecting circuits like those we’ve seen before. The decoding circuits take the instructions, which arrive in an encoded form, and decode them into the signals that give rise to the main operations the CPU is able to execute. The selecting circuits are MUXes (multiplexers) which, according to their selection bits, open different paths inside the CPU for the signals that reach them from the instruction decoding.
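The two kinds of circuit just described can be sketched in a few lines of Python. This is only an illustrative model under stated assumptions: the function names `decode` and `mux` and the 2-bit opcode width are hypothetical, chosen for the example, and do not describe any real CPU.

```python
def decode(opcode, width=2):
    """n-to-2^n decoder: exactly one output line is set to 1."""
    return [1 if line == opcode else 0 for line in range(2 ** width)]


def mux(inputs, select):
    """Multiplexer: the selection bits pick which input reaches the output."""
    return inputs[select]


# A 2-bit opcode with value 2 activates output line 2 only.
print(decode(2))                       # [0, 0, 1, 0]

# The same value used as selection bits routes input "C" to the output.
print(mux(["A", "B", "C", "D"], 2))    # C
```

In hardware both functions are built from logic gates. Here a list lookup stands in for the gates.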

The CPU Registers are the CPU’s internal memory, composed of Registers located inside its Core, where it temporarily stores the data and addresses it needs during program execution. The width of these CPU Registers defines the number of bits the CPU can process at once (e.g. 8, 16, 32 or 64 bits).

These are the inns we referred to when we analyzed the ETL (Edge Triggered Latches): precisely the place where the set of bits corresponding to the CPU’s processing width wait for each other at the end of an intense journey of activity.
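To make the idea of register width concrete, here is a minimal sketch of what happens when a result does not fit in a register. The function name `add_in_register` is hypothetical, and the model assumes unsigned values whose extra bits are simply discarded.

```python
def add_in_register(a, b, width=8):
    """Add two unsigned values and keep only the bits that fit in the register."""
    mask = (1 << width) - 1      # e.g. 0b11111111 for an 8-bit register
    return (a + b) & mask


print(add_in_register(200, 100))       # 44: 300 does not fit in 8 bits
print(add_in_register(200, 100, 16))   # 300: it fits in 16 bits
```

An 8-bit register can only hold values from 0 to 255, so the ninth bit of the sum is lost. A wider register holds the full result.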

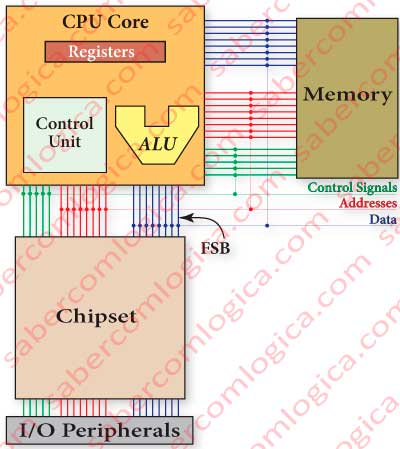

Figure 1 shows a very basic CPU composition, its closest neighbors and its connections with them, using designations that have not yet been addressed:

- The CPU’s Core.

- The Chipset (set of chips), responsible for the communication between the CPU and the computer’s peripherals.

- The Main Memory (MM) where the CPU accesses the instructions and the data of an executing program.

- The FSB (Front Side Bus), composed of the connection lines with the Chipset, through which data, addresses and control signals flow.

In the architecture depicted here, communication with the MM is already done directly by the CPU chip, which contains the memory controller (not shown here). In the most recent architectures, communication with the graphics cards is also managed directly by the CPU chip. All these components are mounted and interconnected on the Motherboard, something we will deal with later on. For now it’s enough just to know it.

The CPU performs the only operation it was designed for: a fetch, decode and execute cycle. As soon as the computer is powered on, the CPU starts a fetch, decode and execute cycle at the memory address held in the instruction pointer. If there’s nothing at the fetched memory address, the CPU repeats its fetch, decode and execute cycle on that memory address indefinitely, at the clock frequency, until it’s powered off or the memory address in the instruction pointer is changed. Even when it finishes running a program, the CPU continues its permanent fetch, decode and execute cycle until instructed otherwise. Instruction Pointer is the name given to the Computer Register that always holds the next memory address to be fetched.

Therefore, the CPU fetches an instruction from the memory at the address pointed to by the Instruction Pointer, decodes it, executes it and repeats the cycle.
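The cycle described above can be sketched as a toy machine. Everything in it is an assumption made for illustration: the four opcodes, the two-cell instruction format and the single `acc` register are hypothetical. Unlike a real CPU, which never stops cycling, this toy returns when it meets a `HALT` so that the example terminates.

```python
# Hypothetical opcodes for a toy machine (not a real instruction set).
HALT, LOAD, ADD, STORE = 0, 1, 2, 3


def run(memory):
    """Repeat the fetch, decode and execute cycle until a HALT is found."""
    ip = 0     # instruction pointer: address of the next instruction
    acc = 0    # accumulator: the toy machine's only data register
    while True:
        opcode, operand = memory[ip], memory[ip + 1]   # fetch
        ip += 2                                        # point to the next instruction
        if opcode == LOAD:                             # decode, then execute
            acc = memory[operand]
        elif opcode == ADD:
            acc += memory[operand]
        elif opcode == STORE:
            memory[operand] = acc
        elif opcode == HALT:
            return memory
        else:
            raise ValueError(f"unknown opcode {opcode} at address {ip - 2}")


# Program: memory[12] = memory[10] + memory[11]
program = [
    LOAD, 10,     # addresses 0-1
    ADD, 11,      # addresses 2-3
    STORE, 12,    # addresses 4-5
    HALT, 0,      # addresses 6-7
    0, 0,         # addresses 8-9 (unused)
    7, 5, 0,      # addresses 10-12: data
]

result = run(list(program))
print(result[12])   # 12
```

Note that instructions and data share the same memory, and that the only thing telling them apart is where the instruction pointer happens to be pointing.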

This is where we humans come in, developing programs that make it operate in the desired way. Because the CPU lives in intimate connection with memory, we will take a brief look at it.

The Computer Memory

The Types of Memory

The computer memory is divided into three types:

- The Primary Memory, composed of the CPU Registers, the Cache and the Main Memory (MM).

- The Secondary Memory, composed of mass storage devices such as the Hard Disc Drives (HDD) or the Solid State Drives (SSD).

- The Tertiary Memory, composed of removable mass storage devices.

The difference between the secondary and tertiary memories lies in the availability of their respective media:

- In the secondary one, the media is always available without the need for human intervention.

- In the tertiary there’s the need for human intervention in order to put the media into the devices.

The Primary Memory is a volatile type of memory, i.e. it loses what’s stored in it when powered off. It is divided into two types, according to its construction:

- The static memory or SRAM (Static Random Access Memory), whose cells are logical circuits. This is the Registers and Cache type of memory.

- The dynamic memory or DRAM (Dynamic Random Access Memory), whose cells are capacitors that do or do not store an electrical charge, accordingly representing the logical states 1 or 0. This is the memory type used in the Main Memory (MM).

The term dynamic reflects the fact that this type of memory requires constant refreshing. Like a leaky bucket of water, the capacitor quickly loses its charge, so its state must be constantly restored. This action is called refreshing, and it is repeated thousands of times per second.

The term static means precisely that: the memory is static and doesn’t require refreshing, because what it memorizes is held at the output of logical circuits like those we have seen before (ETL for Registers and Latches for Cache), which do not depend on any storage medium but simply on a specific combination of connections between the circuits’ transistors.

The secondary memory is a persistent type of memory, i.e. the data stored in it doesn’t depend on being powered. It is predominantly composed of magnetic recording media, the HDD. More recently, the SSD (Solid State Drive), composed of flash memory, a static and persistent memory, has been growing in use. Due to the higher cost and lower storage capacity of the latter, large mass storage still remains a privilege of magnetic disks (HDD).

The tertiary memory, also a persistent memory type, is composed of optical recording media such as the CD (Compact Disc), the DVD (Digital Versatile Disc) or the BD (Blu-ray Disc). Tertiary magnetic media consist mainly of removable HDD, as diskettes are virtually abandoned and magnetic tapes, used for backing up very large amounts of data, are being replaced by HDD, which keep growing in size and dropping in price.

What’s Each Memory Type For

The Main Memory (MM),

is where the instructions and data of a running program are. The MM is the large, quick-access storage space used by the CPU, nowadays composed of billions of cells. We’ll define the bit, the smallest unit of storage, as the MM cell, which can only contain the value 1 or 0. However, because memory is never accessed in quantities smaller than one Byte (8 bits), the Byte is sometimes designated as the MM cell. To avoid any confusion, we define the Byte as the content of an MM location, identifiable by its address. This definition is even easier to understand if we remember that memory access is always referenced to the Byte.

The clock frequencies of the memory type used in the MM are much lower than the CPU’s. Beyond that, it has large latencies (as seen from the CPU) between different accesses, which delay the CPU for a long time whenever it needs a value from the MM.

The Cache Memory

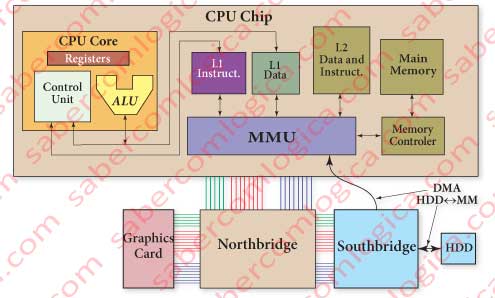

was created in order to significantly reduce this waiting time, and is interposed between the CPU and the MM. In this work we will consider two Cache levels, the Level 1 Cache (L1) and the Level 2 Cache (L2).

The data held in the Caches is always a copy of data fetched from the MM, selected through complex statistical algorithms in the MMU, from where the caches are loaded. The data held in L1 may or may not be a copy of the data held in L2. The CPU only knows about L1: when the CPU talks to the memory, it is L1 that it talks to.

Each time a value fetched by the CPU is found in the Cache, we have a Cache Hit. The Hit Ratio currently achieved, according to statistical studies, exceeds 95% and is likely to improve with the latest CPUs, given the complexity of the fetch algorithms, the increase in cache size and the effort made by program compilers to produce code that fits the basic parameters those algorithms follow.
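The following sketch shows how a Hit Ratio can be measured on a very small model. It is not the algorithm used by real CPUs, which the text describes as far more complex. It assumes a simple direct-mapped cache, and the function name `hit_ratio` and the sizes used (4 lines of 4 bytes) are hypothetical.

```python
def hit_ratio(addresses, lines=4, line_size=4):
    """Direct-mapped cache model: returns hits divided by total accesses."""
    tags = [None] * lines            # which MM block each cache line holds
    hits = 0
    for addr in addresses:
        block = addr // line_size    # MM block that contains this address
        index = block % lines        # the only cache line that block can use
        if tags[index] == block:
            hits += 1                # Cache Hit
        else:
            tags[index] = block      # Cache Miss: load the block from the MM
    return hits / len(addresses)


# A program that walks over the same 16 bytes ten times.
trace = list(range(16)) * 10
print(hit_ratio(trace))   # 0.975
```

Only the first access to each of the four blocks misses, so 156 of the 160 accesses are hits. Programs that reuse nearby addresses, as loops do, are what make high Hit Ratios possible.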

Assuming, for example, that an access to the MM takes 50 ns and an access to the cache takes 2 ns, a 95% Hit Ratio results in an average memory access time of 0.95 × 2 + 0.05 × 50 = 4.4 ns, which reduces memory access time by about 91.2%. I think that’s enough to convey the importance of the Cache in improving CPU performance.
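The arithmetic of this example can be checked with a short script. The function name `average_access_time` is hypothetical, and the model is the simple one used in the text, where a miss costs one MM access and nothing more.

```python
def average_access_time(hit_ratio, cache_ns, mm_ns):
    """Weighted average of cache and MM access times, in nanoseconds."""
    return hit_ratio * cache_ns + (1 - hit_ratio) * mm_ns


avg = average_access_time(0.95, cache_ns=2, mm_ns=50)
reduction = (50 - avg) / 50

print(f"{avg:.1f} ns")      # 4.4 ns
print(f"{reduction:.1%}")   # 91.2%
```

Trying other values shows how sensitive the result is to the Hit Ratio: at 99% the average drops to about 2.5 ns, while at 80% it rises to 11.6 ns.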

Since the Intel Pentium CPU, the L1 Cache has been divided into two equal blocks,

- the data cache memory and

- the instructions cache memory,

with the purpose of making the CPU believe that it has two separate memories. Beyond L1 the memory is a single one, data and instructions together, according to the Von Neumann architecture.

The Von Neumann architecture, which is still used in current computers, put an end to separate data and instruction memories many years ago. That separation frequently led to situations where space was lacking for instructions while space was left over for data, or vice versa. If there is no separation, the whole space can always be used, distributed according to the needs of data and instructions.

However, CPU performance is greatly improved by introducing the concept of the Harvard architecture, which consists of separating the two memories. In this situation, the CPU can access the instruction and data memories simultaneously, the instructions are simplified, and the competition for memory access between the control unit’s requests for instructions and for data is avoided.

The strategy used by computer engineering, creating an L1 cache with separate instruction and data memories, followed by upper Cache levels and an MM having one single space for both data and instructions, got the best of both worlds. The CPU, speaking only to L1, works as if there were two separate memories, which are actually just one.

The Computer Registers,

are at the top of the memory hierarchy and are composed of Registers equal to the ones we’ve seen before. They are located inside the CPU core, which uses them for the temporary allocation of the data and addresses of running programs. They are always few, due to their location and to the huge density of transistors they need for each memory cell. It’s precisely that density that makes their use economically inappropriate in memories of bigger sizes.

They are the tool provided to the CPU to organize and synchronize information, so that at a given moment the several circuits of the computer can freeze a specific bit pattern and process it.

The Secondary Memory,

persistent, is where all the programs and data necessary for the computer to work are permanently kept. This is really the computer’s great memory.

In Conclusion

What we’ve just described is called the computer memory hierarchy, in which, as the memories grow in size, their price per bit falls and their access times rise. We give ourselves the right to conclude with a symbolic summary:

the MM is the cache of the HDD, the Cache Memory is the cache of the MM and the Computer Registers are the CPU operating memory.

Figure 3 shows graphically what happens inside the CPU chip. There, memory access beyond L1 is the responsibility of the MMU. The MMU (Memory Management Unit) manages the complex process of memory access by the CPU. How the MMU manages the CPU’s memory access, how the CPU chip’s direct connections to other components have evolved, and even how those other components have evolved, are issues to be addressed separately, with the appropriate detail, in dedicated Chapters. The same goes for the MM and the Cache.

For now, it’s the CPU that we are dealing with. So let’s keep it simple, so that it is easier to understand. But before we continue, here are two important concepts for what follows.

The Address

The Address refers to the location in the MM where a certain value is stored.

What good would it do to save a value in the MM, somewhere among billions of other locations, without recording where? It would be the same as not saving it.

This is the reason why the address is an integral part of a reference to a value kept in the MM. The CPU refers to those values through their addresses and not by their value.

The addressing ability of the CPU is determined by the width of its Registers (the size of its word) and defines the largest addressable size of the MM.

In our mental picture, the MM will have its locations organized in a stack, as shown in Figure 2, each position being identified by a sequence number starting at 0.
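The relation between register width and addressable memory can be put into numbers. The sketch below assumes that every bit of the register is used for addressing and that each address identifies one Byte. Real CPUs often use fewer address bits than their word size, so the results are upper limits. The function name `addressable_bytes` is hypothetical.

```python
def addressable_bytes(address_bits):
    """Number of distinct addresses, numbered 0 to 2**address_bits - 1."""
    return 2 ** address_bits


for bits in (8, 16, 32, 64):
    size = addressable_bytes(bits)
    print(f"{bits:2d} bits: addresses 0 to {size - 1} ({size} Bytes)")
```

With 16 bits the CPU can address 65536 Bytes (64 KiB), and with 32 bits 4294967296 Bytes (4 GiB).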

DMA

DMA (Direct Memory Access), referred to in Figure 3, is responsible for direct communication between the HDD and the MM through the MMU. It is something we’ll talk about when dealing with Operating Systems. For now, we know that the communication between the HDD and the MM can be done outside the CPU’s control, leaving it free for other tasks.

Communication with other memory-intensive peripherals, such as graphics cards, will also benefit from DMA.